MLC GPU-Accelerated LLM on a $100 Orange Pi

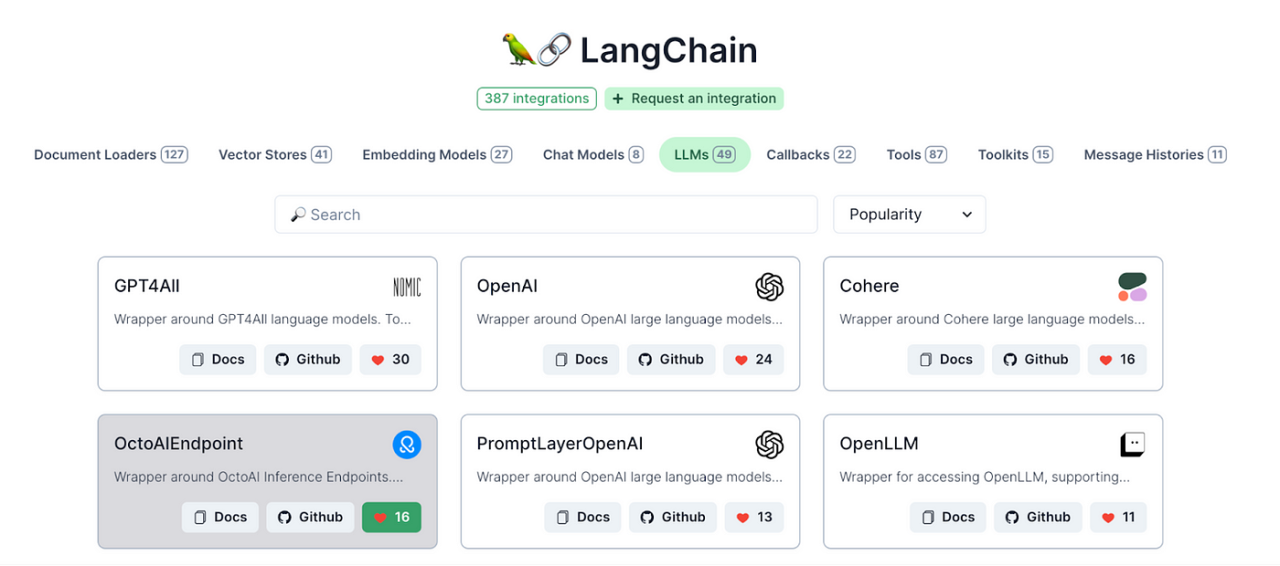

Junru Shao on LinkedIn: MLC LLM now supports running Vicuña-7b on Android phones as its latest…

Junru Shao on LinkedIn: MLC

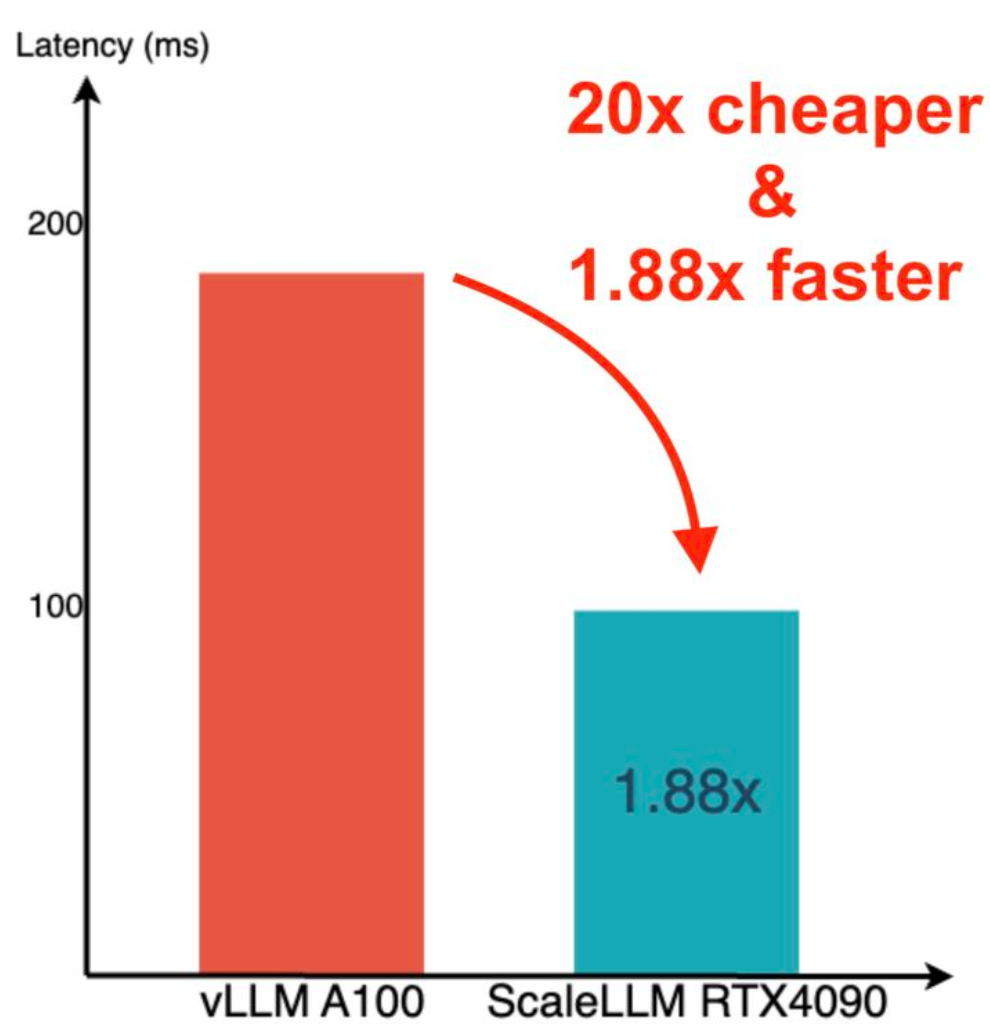

ScaleLLM: Unlocking Llama2-13B LLM Inference on Consumer GPU RTX 4090, powered by FEDML Nexus AI

Bohan Hou (@bohanhou1998) / X

Junru Shao on LinkedIn: Thanks for featuring our work! We believe ML compilation techniques are…

David Evans on LinkedIn: GPU-Accelerated LLM on a $100 Orange Pi

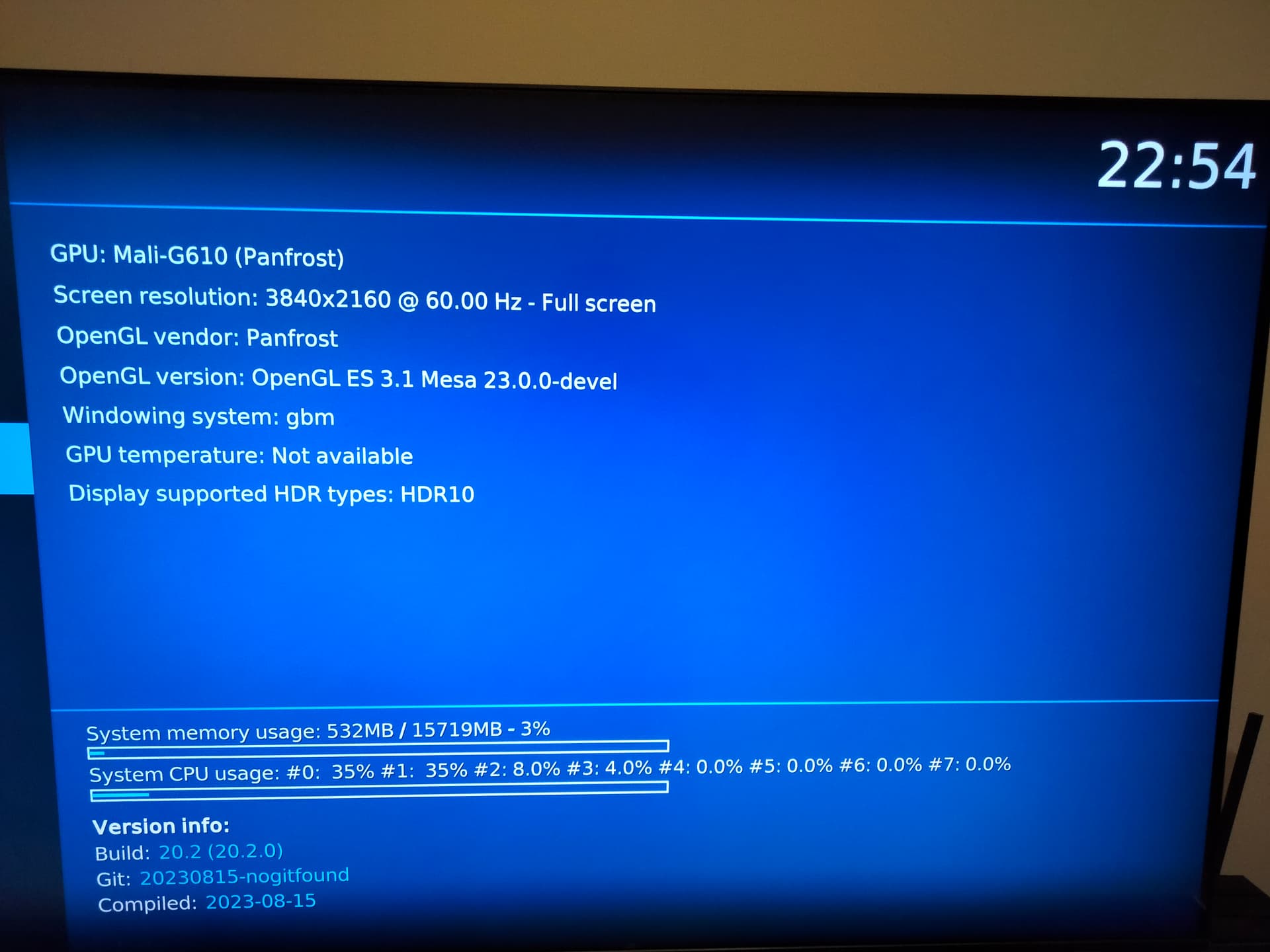

Orange Pi 5 Hardware Acceleration enabled? - General Discussion - DietPi Community Forum

Andrej Karpathy on X: "@satish_k maybe something like this? GPU-Accelerated LLM on a $100 Orange Pi. $100 Orange Pi 5 => 2.5 tok/s Llama-2 7B"

MLC Scalable Language Model Inference on Multiple NVIDIA and AMD GPUs

Junru Shao on LinkedIn: Bringing Open Large Language Models to Consumer Devices

[Project] MLC LLM: Universal LLM Deployment with GPU Acceleration : r/LocalLLaMA